Over the last couple of months I have been coaching a number of PHP teams to help them improve their software engineering practices. The main goals were to improve the quality of the product, ease of delivery and the overall maintainability of the code. If there is one thing that defines maintainable code, in my opinion, it is the existence of unit tests. However, one of the things that proved more difficult than one might expect is to start writing proper unit tests in an existing PHP solution.

In this instance, the teams were using the Laravel framework. However, standard Laravel practices limited the testability of the code created by the teams. I have worked with these teams to make their code more testable to two ends:

- Improve overall testability by introducing new class design patterns

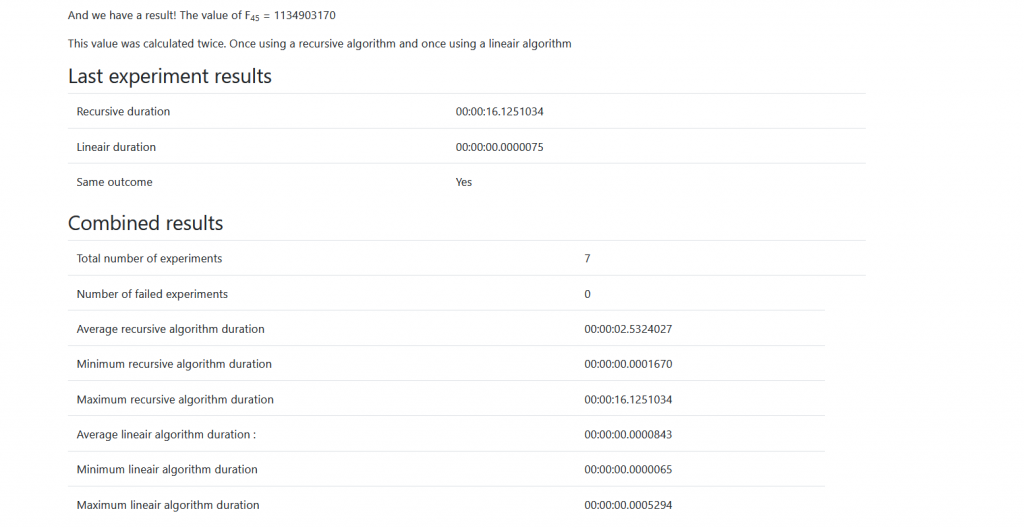

- Reduce the duration of tests. Prior to this approach, a lot of tests were implemented as end-to-end, interface-based tests. And boy, are they slow!

After a number of weeks, we saw the first results coming in, so all of this worked out nicely.

The goal of this post is to share the issues found that were preventing the team from proper unit testing and how we got around them.

Issue 1: instantiating a class in a unit test

The first thing we ran into was the fact that it was impossible to instantiate any class from a unit test. There were two reasons for this. The first was that there was actual work done in the constructor of almost every class: calling a method on another class and/or hitting the database.

In addition, dependencies for any class were not passed in via the constructor, but were created in the constructor using a standard Laravel pattern. The good news here is that Laravel actually provides you with a dependency container. The bad news is that it was often used like this:

class TestSubject {

public function __construct() {

$this->someDependency = app()->make(SomeDependency::class);

}

}

This calls a global, static method app() to get the dependency container and then instantiates a class by type. Having this code in the constructor makes it completely impossible to new the class up from a unit test.

In short, we couldn’t instantiate classes in a unit test due to:

- Doing work in a constructor

- Instantiating dependencies ourselves

Solution: Let the constructor only gather dependencies

First of all, calling methods or the database was quite easy to refactor out of the constructors. This is also something that can easily be avoided when creating new classes.

The best way to avoid instantiating dependencies yourself is to leave that to the framework. Instead of hitting the global app() method to obtain the container, we added the needed type as a parameter to the constructor, leaving it up to the Laravel container to provide an instance at runtime (constructor dependency injection).

public function __construct(SomeDependency $someDependency) {

$this->someDependency = $someDependency;

}

Now there is still one issue here: we are depending upon a concrete class, not an abstraction. This means we are violating the Dependency Inversion principle. To fix this, we need to depend on an interface. However, now the Laravel dependency container no longer knows which type to provide to our class when instantiating it, since it cannot instantiate an interface. Therefore, we have to configure a binding that maps the interface to the class.

app()->bind(SomeDependencyInterface::class, SomeDependency::class);

Having done this, we can now change our constructor to look as follows.

public function __construct(SomeDependencyInterface $someDependency) {

$this->someDependency = $someDependency;

}
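To make it a bit more tangible why the binding is needed, here is a toy container. It is a minimal sketch under my own assumptions and certainly not Laravel's actual implementation: ToyContainer, name() and dependencyName() are hypothetical names that exist only for this illustration. It resolves constructor parameters by their type hint and fails on an interface unless a binding tells it which class to use.

```php
<?php
// Toy illustration only: ToyContainer is a hypothetical stand-in, not Laravel's container.

interface SomeDependencyInterface
{
    public function name(): string; // hypothetical method, for illustration
}

class SomeDependency implements SomeDependencyInterface
{
    public function name(): string
    {
        return 'real dependency';
    }
}

class TestSubject
{
    private $someDependency;

    public function __construct(SomeDependencyInterface $someDependency)
    {
        $this->someDependency = $someDependency;
    }

    public function dependencyName(): string
    {
        return $this->someDependency->name();
    }
}

final class ToyContainer
{
    private $bindings = [];

    public function bind(string $abstract, string $concrete): void
    {
        $this->bindings[$abstract] = $concrete;
    }

    public function make(string $type)
    {
        // Use the bound class if there is one, otherwise the requested type itself.
        $concrete = $this->bindings[$type] ?? $type;
        $reflection = new ReflectionClass($concrete);

        // Interfaces and abstract classes cannot be instantiated without a binding.
        if (!$reflection->isInstantiable()) {
            throw new RuntimeException("No binding registered for {$type}");
        }

        $constructor = $reflection->getConstructor();
        if ($constructor === null) {
            return new $concrete();
        }

        // Recursively build every type-hinted constructor parameter.
        $arguments = [];
        foreach ($constructor->getParameters() as $parameter) {
            $arguments[] = $this->make($parameter->getType()->getName());
        }

        return $reflection->newInstanceArgs($arguments);
    }
}

$container = new ToyContainer();
$container->bind(SomeDependencyInterface::class, SomeDependency::class);
$subject = $container->make(TestSubject::class);
```

Without the bind() call, make() would end up at the interface and throw, which is the toy equivalent of the container not knowing which type to provide.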

At this point we have changed the following:

- No work in constructors

- Getting dependencies provided instead of instantiating them ourselves.

- Depending upon abstractions

Mission accomplished! These things combined now allow us to instantiate our test subject in a unit test as follows:

$this->dependencyMock = $this->createMock(SomeDependencyInterface::class);

$this->subject = new TestSubject($this->dependencyMock);
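The two lines above rely on PHPUnit. To show that nothing else is needed once the constructor only gathers dependencies, here is a rough, framework-free sketch of the same idea with a hand-written stub. The methods fetchGreeting() and greet() are hypothetical and only there to give the subject some behavior to check.

```php
<?php
// Sketch only: fetchGreeting() and greet() are hypothetical members added for illustration.

interface SomeDependencyInterface
{
    public function fetchGreeting(): string;
}

class TestSubject
{
    private $someDependency;

    // The constructor only stores its dependency; it does no work.
    public function __construct(SomeDependencyInterface $someDependency)
    {
        $this->someDependency = $someDependency;
    }

    public function greet(): string
    {
        return strtoupper($this->someDependency->fetchGreeting());
    }
}

// A hand-written stub standing in for the real dependency.
$dependencyStub = new class implements SomeDependencyInterface {
    public function fetchGreeting(): string
    {
        return 'hello';
    }
};

// No container, no database, no framework: just new.
$subject = new TestSubject($dependencyStub);
$greeting = $subject->greet();
```

In a real test, a mock created with createMock() plays the role of the anonymous class here.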

Issue 2: Global static helper methods

Now we can instantiate a TestSubject in a test and start testing it. The second we got to this state, we ran into another problem that was all over the code base: global, static helper methods. These methods have different sources: built-in PHP methods, Laravel helper methods, or convenience methods from third parties. However, they all present us with the same problem when it comes to testability:

- We cannot mock calls to global, static methods. This means we cannot replace their behavior at test time and thus cannot isolate our TestSubject. Instead, our tests start pulling in real dependencies, dependencies of dependencies, etc.

From here on, I will share (roughly in order of preference) a number of approaches to get around this limitation.

Solution 1: Finding a constructor injection replacement

When starting to investigate these static methods, especially those provided by Laravel, we saw that a lot of them were just short wrapper methods around the Dependency Container. For example, the implementation of a much used view method was this:

function view($view = null, $data = [], $mergeData = [])

{

$factory = app(ViewFactory::class);

if (func_num_args() === 0) {

return $factory;

}

return $factory->make($view, $data, $mergeData);

}

For all these convenience methods, it is straightforward to see that we can easily refactor the calling code from this:

public function __construct() { }

public function index() {

// … more code

return view("blade.name", $params);

}

To this:

public function __construct(ViewFactory $viewFactory) {

$this->viewFactory = $viewFactory;

}

public function index() {

// … more code

return $this->viewFactory->make("blade.name", $params);

}

A quick and easy way to remove a decent portion of calls to global functions.

Solution 2: Software engineering tricks

If there is no interface readily available for constructor injection, we can create one ourselves. A common engineering trick is to move unmockable code to a new class. We then inject this class into our subject at runtime. At test time, however, we can mock this wrapper and test our subject as much as possible.

As an example, let’s take the following code:

class Subject {

function isFileChanged($fileName, $originalHash) {

$newHash = sha1_file($fileName);

return $originalHash !== $newHash;

}

}

Of course we can test this class by letting it operate on a temporary file, but another approach would be to do this:

class Subject {

private $sha1Hasher;

public function __construct(ISha1Hasher $sha1Hasher) {

$this->sha1Hasher = $sha1Hasher;

}

function isFileChanged($fileName, $originalHash) {

$newHash = $this->sha1Hasher->hash($fileName);

return $originalHash !== $newHash;

}

}

Maybe not a thing you would do in this specific instance. But if you have code that is more complex and is executing a single call to a global method, this way you can move that call behind an interface and mock it out while testing:

public function testSubject() {

$hasherMock = $this->createMock(ISha1Hasher::class);

$hasherMock->method("hash")->with("n/a")->willReturn("123");

$subject = new Subject($hasherMock);

$result = $subject->isFileChanged("n/a", "123");

$this->assertFalse($result);

}
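The wrapper itself is not shown above. A minimal sketch of what the interface and its production implementation might look like follows. The class name Sha1FileHasher and the choice of sha1_file() are my assumptions for this illustration, not code taken from the teams.

```php
<?php
// Sketch only: Sha1FileHasher and the use of sha1_file() are assumptions.

interface ISha1Hasher
{
    public function hash(string $fileName): string;
}

// Production implementation: a thin wrapper around the built-in function.
final class Sha1FileHasher implements ISha1Hasher
{
    public function hash(string $fileName): string
    {
        $hash = sha1_file($fileName);
        if ($hash === false) {
            throw new RuntimeException("Cannot hash file: {$fileName}");
        }

        return $hash;
    }
}

// Usage: hash a temporary file through the wrapper, then clean up.
$tempFile = tempnam(sys_get_temp_dir(), 'sha');
file_put_contents($tempFile, 'hello');
$fileHash = (new Sha1FileHasher())->hash($tempFile);
unlink($tempFile);
```

At runtime, the interface would be bound to this class in the container, just like SomeDependencyInterface earlier. The wrapper is kept so thin that there is hardly anything left in it that is worth unit testing.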

In my opinion, solution 2 is by far a better approach to take than solutions 3 and 4. However, if you are afraid that adding too many types might clutter your codebase or reduce the performance of your application, there are two more approaches available. Both have drawbacks, so I would only use them if you see no other way.

Solution 3: Leveraging PHP namespace precedence

If refactoring global static calls in your code to a new class is not an option and your code is organized into namespaces, there is another way we can mock calls to built-in PHP methods. In the file with our TestClass, we can add a new method with the same name in the namespace of the caller.

For example, the following call to file_exists() cannot be mocked out:

namespace demo;

class Subject {

public function hasFile() {

return file_exists("d:\bier");

}

}

As you can see, the class containing the hasFile() method is in a namespace called demo. We can create a new method, also called file_exists(), in that same namespace, just before our TestClass. When an unqualified call is executed, PHP first looks for the function in the caller's own namespace and only falls back to the global one if it finds nothing there, so our version takes precedence.

This means we can mock the call to file_exists() to always return true, as follows:

namespace demo;

function file_exists($fileName) {

return true;

}

class TestClass {

public function testWhenFileExists_thenReturnTrue() {

$result = $this->subject->hasFile();

$this->assertTrue($result);

}

}
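A fixed return value only covers one outcome. As a rough sketch of how both outcomes could be covered, the shadowing function can read its result from a small holder class. FileExistsStub is a hypothetical helper I made up for this example; it is not part of PHP or PHPUnit.

```php
<?php
namespace demo;

// Sketch only: FileExistsStub is a hypothetical helper, not part of PHP or PHPUnit.
final class FileExistsStub
{
    public static $result = true;
}

// Shadows the built-in for unqualified calls made inside the demo namespace.
function file_exists($fileName)
{
    return FileExistsStub::$result;
}

class Subject
{
    public function hasFile()
    {
        return file_exists("d:\\bier");
    }
}

$subject = new Subject();

// Pretend the file is there.
FileExistsStub::$result = true;
$whenPresent = $subject->hasFile();

// Pretend the file is missing.
FileExistsStub::$result = false;
$whenMissing = $subject->hasFile();
```

Keep in mind that this only affects unqualified calls. A call written as \file_exists(), or one that imports the function with use function, goes straight to the built-in version. The shadowing function should also be loaded before the subject makes its first call, since PHP may remember which function it resolved.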

The main drawback of this approach is that it reduces the readability of your code. Also, relying on method hiding for testing purposes might make your code harder to understand for those that do not grasp all the language details.

Solution 4: Leveraging your frameworks and libraries

Finally, your framework might provide its own means for mocking certain calls. In Laravel for example, there is a construct of Facades that you can also use for mocking purposes. Another example is the Carbon datetime convenience library that provides a global static Carbon::setTestNow() method.

I for one would discourage this, as it would mean that you are writing logic that will become dependent on your framework and will not ever be able to switch to another framework without redoing everything. (However… who has done that even once?)

My other argument is one of taste: I simply do not like adding methods to production code, only to make it testable. And I have seen misuse of methods intended for tests only in production code as well…

However, if you do not share these feelings, the approach is quite nicely detailed here: https://laravel.com/docs/5.6/facades or here: http://laraveldaily.com/carbon-trick-set-now-time-to-whatever-you-want/

Conclusion

I hope that this blog gives you a number of approaches to make your PHP code more (unit) testable. Because we all know that only code that is continuously tested in a pipeline can be shipped quickly, easily, and often to customers.

Enjoy!

With thanks for proofreading: Wouter de Kort, Alex Lisenkov